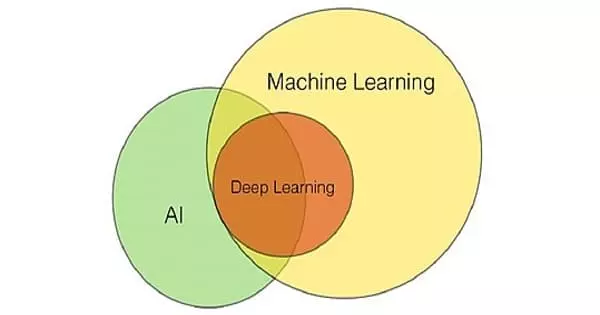

Machine learning (ML) is an application of artificial intelligence (AI) that allows a system to learn and improve automatically from experience rather than through explicit programming. This is possible because large amounts of data are now available, allowing machines to be trained rather than programmed. It is regarded as a major technological revolution, capable of analyzing massive amounts of data.

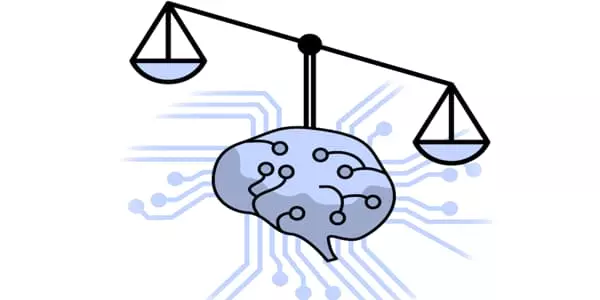

Almost everything that comes out of the technology world these days appears to contain some form of artificial intelligence or machine learning. Today, machine learning is used to implement some aspects of human abilities, but not the full potential of human intelligence. By collaborating with smart software, people can accomplish more with ML. It is like putting a more human face on technology.

Researchers at Carnegie Mellon University are challenging a long-held belief that when using machine learning to make public policy decisions, there is a trade-off between accuracy and fairness.

As machine learning has spread into areas such as criminal justice, hiring, health care delivery, and social service interventions, concern has grown about whether such applications introduce new inequities or amplify existing ones, particularly among racial minorities and people with low income. To mitigate this bias, adjustments are made to the data, labels, model training, scoring systems, and other aspects of the machine learning system. The underlying theoretical assumption is that these adjustments reduce the accuracy of the system.

In a new study published in Nature Machine Intelligence, a CMU team seeks to dispel that assumption. Rayid Ghani, a professor in the School of Computer Science’s (SCS) Machine Learning Department (MLD) and the Heinz College of Information Systems and Public Policy; Kit Rodolfa, a research scientist in MLD; and Hemank Lamba, a postdoctoral researcher in SCS, tested that assumption in real-world applications and discovered that the trade-off was negligible in practice across a variety of policy domains.

Machine learning is transforming industries such as healthcare, education, transportation, food, entertainment, and various assembly lines. It will affect almost every aspect of people’s lives, including housing, cars, shopping, and food ordering. Technologies such as the Internet of Things (IoT) and cloud computing are increasing the use of ML to make objects and gadgets “smart”. Companies attempting to leverage big data for customer satisfaction may find ML useful, because the hidden patterns buried in the data can be extremely beneficial to businesses.

“You can, in fact, have both. It is not necessary to sacrifice accuracy in order to create systems that are fair and equitable,” Ghani stated. “However, it does necessitate deliberate system design in order to be fair and equitable. Off-the-shelf systems will not suffice.”

Ghani and Rodolfa concentrated on situations in which in-demand resources are limited and machine learning systems are used to help allocate them. The researchers examined four systems: prioritizing limited mental health care outreach based on a person’s risk of returning to jail, in order to reduce reincarceration; predicting serious safety violations to better deploy a city’s limited housing inspectors; modeling the risk of students not graduating from high school on time to identify those most in need of additional support; and assisting teachers in meeting crowdfunding goals for classroom needs.

The researchers discovered that models optimized for accuracy – standard practice in machine learning – could effectively predict the outcomes of interest in each context, but there were significant disparities in recommendations for interventions. However, when the researchers made changes to the models’ outputs to improve their fairness, they discovered that disparities based on race, age, or income – depending on the situation – could be removed without sacrificing accuracy.
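To make the idea of adjusting a model's outputs concrete, here is a minimal Python sketch. It is an illustration only, not the study's actual procedure: the toy scores, labels, and group names are invented, and the function names (`top_k`, `balanced_top_k`, and so on) are hypothetical. It assumes a fixed budget of `k` interventions, at least one true positive in every group, and access to labelled historical data for tuning the selection. The sketch compares picking the `k` highest scores with a greedy rule that always draws the next person from the group whose recall is currently lowest.

```python
from fractions import Fraction

# Toy, invented data: the model scores group "B" systematically lower than "A".
scores = [0.9, 0.8, 0.7, 0.6, 0.65, 0.5, 0.45, 0.4]
labels = [1, 1, 1, 0, 1, 1, 0, 1]   # 1 = person truly needed the intervention
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]


def top_k(scores, k):
    """Accuracy-only selection: indices of the k highest scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(order[:k])


def precision(selected, labels):
    """Share of selected people who truly needed the intervention."""
    return Fraction(sum(labels[i] for i in selected), len(selected))


def recall_by_group(selected, labels, groups):
    """Per group, the share of its true positives that were selected."""
    result = {}
    for g in sorted(set(groups)):
        positives = [i for i, lab in enumerate(labels) if groups[i] == g and lab == 1]
        hits = sum(1 for i in positives if i in selected)
        result[g] = Fraction(hits, len(positives))  # assumes >= 1 positive per group
    return result


def balanced_top_k(scores, labels, groups, k):
    """Greedy post-hoc adjustment: draw the next person from the group with the
    lowest recall so far, breaking ties in favour of the higher score."""
    queues = {}
    for g in sorted(set(groups)):
        members = [i for i in range(len(scores)) if groups[i] == g]
        queues[g] = sorted(members, key=lambda i: scores[i], reverse=True)
    n_pos = {g: sum(labels[i] for i in q) for g, q in queues.items()}
    hits = {g: 0 for g in queues}
    selected = set()
    while len(selected) < k:
        candidates = [g for g in queues if queues[g]]
        if not candidates:
            break  # fewer than k people available in total
        g = min(
            candidates,
            key=lambda g: (Fraction(hits[g], n_pos[g]), -scores[queues[g][0]]),
        )
        i = queues[g].pop(0)
        selected.add(i)
        hits[g] += labels[i]
    return selected


plain = top_k(scores, 4)
fair = balanced_top_k(scores, labels, groups, 4)
print("precision:", precision(plain, labels), "vs", precision(fair, labels))
print("recall (plain):", recall_by_group(plain, labels, groups))
print("recall (balanced):", recall_by_group(fair, labels, groups))
```

On this toy data both selections have the same precision, while recall moves from 1 for group A and 1/3 for group B to 2/3 for each. In a real deployment the labels are not known when people are selected, so a rule like this would be tuned on historical data and then applied as group-specific score cut-offs.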

Ghani and Rodolfa hope that their findings will influence the thinking of other researchers and policymakers who are considering the use of machine learning in decision-making. “We want the artificial intelligence, computer science, and machine learning communities to stop accepting the assumption that there is a trade-off between accuracy and fairness and to start designing systems that intentionally maximize both,” Rodolfa said. “We hope that policymakers will use machine learning as a decision-making tool to help them achieve equitable outcomes.”