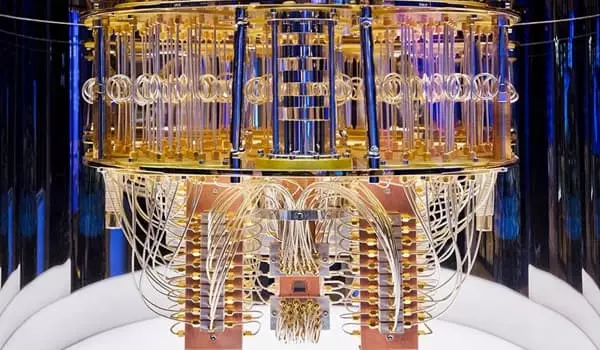

The University of Helsinki, Aalto University, the University of Turku, and IBM Research Europe-Zurich are working together to advance quantum computing. The researchers devised a method to reduce the number of measurements required to read data stored in the state of a quantum processor. As a result, quantum computers could become more efficient, faster, and, ultimately, more sustainable.

Traditional computers store data and run programs using bits, each of which always holds a definite value of 1 or 0. Quantum computers instead use qubits. A qubit can exist in a superposition of 0 and 1, and observing (measuring) it forces it to take one of the two values.

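A qubit's state is described by amplitudes, one for 0 and one for 1. An amplitude is a complex number that plays a role similar to a probability but follows different rules: the probability of an outcome is the squared magnitude of its amplitude, and amplitudes can be negative or complex. Because of this, amplitudes can add up constructively or cancel destructively, an effect called quantum interference.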
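
The difference between amplitudes and ordinary probabilities can be seen in a few lines of plain Python. This minimal sketch simulates one qubit as a pair of complex amplitudes and applies the Hadamard gate (a standard single-qubit operation, chosen here for illustration; the article does not name it) twice. The first application produces an equal superposition; the second makes the two contributions to outcome 1 cancel, returning the qubit to 0 with certainty. A classical fair random flip applied twice, by contrast, stays at 50/50, because probabilities are never negative and cannot cancel.

```python
import math

H = 1 / math.sqrt(2)  # Hadamard normalization factor


def hadamard(state):
    """Apply the Hadamard gate to [amp0, amp1]: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2."""
    a0, a1 = state
    return [H * (a0 + a1), H * (a0 - a1)]


def probabilities(state):
    """Born rule: the probability of each outcome is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]


def random_flip(probs):
    """Classical analogue: a fair random flip mixes probabilities, which never cancel."""
    p0, p1 = probs
    return [0.5 * (p0 + p1), 0.5 * (p0 + p1)]


zero = [1 + 0j, 0j]        # qubit prepared in 0
once = hadamard(zero)      # equal superposition: about 50/50
twice = hadamard(once)     # contributions to 1 cancel, contributions to 0 add up

print(probabilities(once))
print(probabilities(twice))
print(random_flip(random_flip([1.0, 0.0])))  # [0.5, 0.5]
```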

Quantum computers have the potential to solve important problems that are beyond the capabilities of even the most powerful supercomputers, but they necessitate an entirely new approach to programming and algorithm development.

Universities and major technology firms are leading research into how to create these new algorithms. A team of researchers from the University of Helsinki, Aalto University, the University of Turku, and IBM Research Europe-Zurich recently developed a new method for speeding up calculations on quantum computers. The findings were published in the American Physical Society’s journal PRX Quantum.

Quantum computers have the potential to accelerate drug discovery and development, giving scientists the ability to solve problems that are currently intractable. Through quantum simulation, these machines may be able to study molecules, proteins, and other chemicals in ways that a standard computer cannot, allowing drug candidates to be developed faster and more effectively than is currently possible.

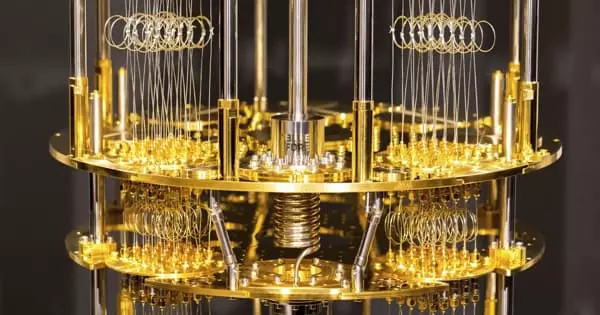

Unlike traditional computers, which use bits to store ones and zeros, information in a quantum processor is stored in the form of a quantum state, or a wavefunction, according to the paper’s first author, postdoctoral researcher Guillermo García-Pérez from the Department of Physics at the University of Helsinki.

Reading data from a quantum computer therefore requires specialized procedures. A quantum algorithm also requires a set of inputs, such as real-valued parameters, together with a list of operations to apply to a reference initial state.

Because the quantum state generally cannot be reconstructed on a conventional computer, useful insights must be extracted by performing specific observations (which quantum physicists call measurements), says García-Pérez.
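
A simulated single qubit shows what extracting information through measurements means in practice. In this minimal sketch, the real-valued input is a rotation angle θ (an assumption for illustration), the operation is a rotation RY(θ) applied to the reference state 0, and the quantity of interest is the expectation value of the Pauli Z observable, whose exact value is cos θ. The program never reads the amplitudes directly when estimating; it only averages simulated ±1 measurement outcomes. The function names are hypothetical, and the random sampling stands in for a real device.

```python
import math
import random


def ry_state(theta):
    """Amplitudes of RY(theta) applied to |0>: [cos(theta/2), sin(theta/2)] (real-valued)."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def sample_z(state, shots, rng):
    """Simulate `shots` measurements in the 0/1 basis, recording +1 for 0 and -1 for 1."""
    p0 = abs(state[0]) ** 2  # Born rule
    return [1 if rng.random() < p0 else -1 for _ in range(shots)]


def estimate_z(theta, shots, seed=0):
    """Estimate <Z> as the mean of the sampled outcomes; the exact value is cos(theta)."""
    rng = random.Random(seed)
    outcomes = sample_z(ry_state(theta), shots, rng)
    return sum(outcomes) / shots


if __name__ == "__main__":
    theta = 1.0  # hypothetical input parameter
    for shots in (100, 10_000, 100_000):
        print(shots, estimate_z(theta, shots), "exact:", math.cos(theta))
```

Each outcome is ±1, so the standard error of the estimate is sqrt(1 - cos²θ)/sqrt(N) for N shots: gaining one more decimal digit of precision costs about 100 times as many measurements.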

The issue is the large number of measurements required by many popular quantum computing applications, such as the so-called Variational Quantum Eigensolver, which could help overcome important limitations in the study of chemistry, for instance in drug discovery. Even when only partial information is required, the number of measurements is known to grow very quickly with the size of the system being simulated. This makes the process difficult to scale, slowing down the computation and consuming large amounts of computational resources.
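
A back-of-the-envelope calculation shows why the measurement count matters. Assume, as in many Variational Quantum Eigensolver implementations, that the energy is written as a weighted sum of Pauli terms, E = Σᵢ cᵢ⟨Pᵢ⟩, that each term is measured separately, and that shots are shared among the terms optimally. The total number of shots needed to reach a standard error ε is then (Σᵢ |cᵢ|σᵢ)²/ε², where σᵢ ≤ 1 is the single-shot standard deviation of term i. The helper below (a hypothetical function, not taken from the paper) evaluates this estimate. The number of terms in a molecular Hamiltonian grows with the size of the molecule, and the sum and the shot count grow with it.

```python
import math


def total_shots(coefficients, epsilon, sigmas=None):
    """Shots needed to estimate E = sum_i c_i <P_i> to standard error `epsilon`.

    Assumes each Pauli term P_i is measured separately and shots are allocated
    optimally (N_i proportional to |c_i| * sigma_i), which gives
    N_total = (sum_i |c_i| * sigma_i)^2 / epsilon^2.
    sigma_i is the single-shot standard deviation of term i; it defaults to the
    worst case of 1, because each Pauli measurement returns +1 or -1.
    """
    if sigmas is None:
        sigmas = [1.0] * len(coefficients)
    weight = sum(abs(c) * s for c, s in zip(coefficients, sigmas))
    return math.ceil(weight ** 2 / epsilon ** 2)


# Hypothetical coefficients: more terms of equal weight need more shots.
print(total_shots([0.5] * 2, 0.5))
print(total_shots([0.5] * 8, 0.5))
```

Doubling the number of equal-weight terms quadruples the worst-case shot count, and halving ε quadruples it again, which is why techniques that extract more from each shot pay off quickly.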

García-Pérez and co-authors’ method employs a generalized class of quantum measurements that are adapted throughout the calculation to efficiently extract the information stored in the quantum state. This drastically reduces the number of iterations required to obtain high-precision simulations, as well as the time and computational cost associated with them.

The method can reuse previous measurement results and adjust its own settings, so subsequent runs become more accurate. The collected data can also be reused to calculate other properties of the system without incurring additional costs.
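
The article does not describe the authors' measurements in detail. As a loose illustration of the data-reuse idea only, the sketch below uses a fixed, textbook single-qubit measurement with four outcomes defined by the corners of a regular tetrahedron (a symmetric, informationally complete measurement). Its outcome statistics are enough to estimate the expectation value of any single-qubit observable, so one data set can answer several questions. It is not the authors' adaptive scheme: it never tunes itself, and the state, shot count, and function names are hypothetical. The state is given as a Bloch vector r, and an observable as o0·I + o·σ.

```python
import math
import random

# Tetrahedral unit vectors n_k; measurement effects E_k = (I + n_k . sigma) / 4.
SQRT2 = math.sqrt(2.0)
TETRA = [
    (0.0, 0.0, 1.0),
    (2 * SQRT2 / 3, 0.0, -1.0 / 3),
    (-SQRT2 / 3, math.sqrt(2.0 / 3), -1.0 / 3),
    (-SQRT2 / 3, -math.sqrt(2.0 / 3), -1.0 / 3),
]


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def outcome_probabilities(bloch):
    """Born-rule probabilities p_k = tr(rho E_k) = (1 + n_k . r) / 4."""
    return [(1.0 + dot(n, bloch)) / 4.0 for n in TETRA]


def sample_outcomes(bloch, shots, rng):
    """Simulate `shots` measurements and return the list of outcome indices 0..3."""
    return rng.choices(range(4), weights=outcome_probabilities(bloch), k=shots)


def estimate(outcomes, o0, o_vec):
    """Unbiased estimate of <o0 * I + o_vec . sigma> from the same recorded outcomes.

    Outcome k is assigned the value o0 + 3 * (o_vec . n_k). Averaging these values
    reproduces the expectation because sum_k n_k = 0 and
    sum_k n_k n_k^T = (4/3) * identity for the tetrahedron.
    """
    return sum(o0 + 3.0 * dot(o_vec, TETRA[k]) for k in outcomes) / len(outcomes)


if __name__ == "__main__":
    rng = random.Random(7)
    state = (0.6, 0.0, 0.8)  # hypothetical pure state with <X> = 0.6, <Z> = 0.8
    data = sample_outcomes(state, 100_000, rng)  # measure once...
    print("X:", estimate(data, 0.0, (1.0, 0.0, 0.0)))  # ...then reuse the data
    print("Z:", estimate(data, 0.0, (0.0, 0.0, 1.0)))
```

The design choice to note is that `estimate` only reweights outcomes already recorded: asking for a new observable costs classical post-processing, not new runs on the quantum device.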

“We maximize the value of each sample by combining all data generated. Simultaneously, we fine-tune the measurement to produce highly accurate estimates of the quantity under study, such as the energy of an interesting molecule. We can reduce the expected runtime by several orders of magnitude by combining these ingredients,” says García-Pérez.

One of the keys to quantum computers’ power is quantum interference. If an algorithm is tailored to a specific computational problem so that it takes advantage of quantum interference (i.e., it is built in a way that increases the chance of hitting the right answer), the result can be an efficient solution to a computational problem that a conventional machine may never be able to solve.
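
Grover's search algorithm is a textbook example of this idea. The article does not mention it; it appears here only as an illustration. In this minimal sketch over four items (two qubits), the item index 2 is a hypothetical "right answer". Each Grover step flips the sign of the marked item's amplitude and then reflects every amplitude about the mean. For four items, a single step makes the wrong answers interfere destructively down to zero and concentrates all the probability on the right one, whereas a blind guess succeeds only one time in four.

```python
import math


def grover_iteration(amplitudes, marked):
    """One Grover step: flip the sign of the marked item, then invert about the mean."""
    flipped = [-a if i == marked else a for i, a in enumerate(amplitudes)]
    mean = sum(flipped) / len(flipped)
    return [2 * mean - a for a in flipped]


n_items = 4                                  # two qubits -> four basis states
marked = 2                                   # hypothetical "right answer"
state = [1 / math.sqrt(n_items)] * n_items   # uniform superposition, amplitude 0.5 each
state = grover_iteration(state, marked)
probs = [a * a for a in state]               # amplitudes are real here, so |a|^2 = a * a
print(probs)                                 # [0.0, 0.0, 1.0, 0.0]
```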