Nobody, including self-driving cars, enjoys driving in a blizzard. Engineers look at the problem from the car’s perspective to make self-driving cars safer on snowy roads. Navigating bad weather is a significant challenge for fully autonomous vehicles. Snow, in particular, muddles critical sensor data that assists a vehicle in gauging depth, detecting obstacles, and staying on the correct side of the yellow line, assuming it is visible. With more than 200 inches of snow on average each winter, Michigan’s Keweenaw Peninsula is the ideal location for testing autonomous vehicle technology.

In two papers presented at SPIE Defense + Commercial Sensing 2021, researchers from Michigan Technological University discussed solutions for snowy driving scenarios that could help bring self-driving options to snowy cities such as Chicago, Detroit, Minneapolis, and Toronto.

Autonomy, like the weather at times, is not a yes-or-no proposition. Autonomous vehicles range in level from cars that already have blind spot warnings or braking assistance to those that can switch in and out of self-driving modes and those that can navigate entirely on their own. Automakers and research institutions are still fine-tuning self-driving technology and algorithms. Occasionally accidents occur, either due to a misjudgment by the car’s artificial intelligence (AI) or a human driver’s misuse of self-driving features.

Humans, too, have sensors: our scanning eyes, sense of balance and movement, and brain processing power help us understand our surroundings. Because human brains are good at generalizing novel experiences, these seemingly simple inputs allow us to drive in virtually any scenario, even if it is unfamiliar to us. In autonomous vehicles, two cameras mounted on gimbals scan and perceive depth using stereo vision to mimic human vision, while an inertial measurement unit measures balance and motion. However, computers can only respond to scenarios that they have previously encountered or have been programmed to recognize.

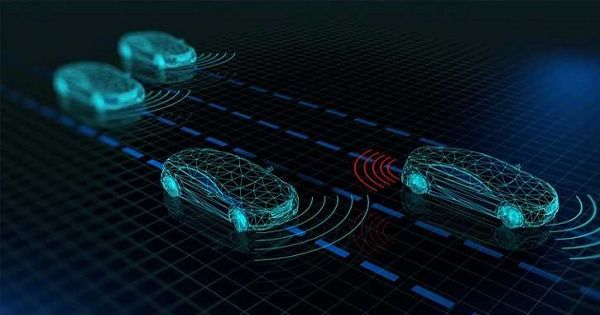

Because artificial brains aren’t yet available, task-specific artificial intelligence (AI) algorithms must drive autonomous vehicles, which necessitates the use of multiple sensors. Fisheye cameras provide a wider field of view, whereas other cameras function similarly to the human eye. Infrared detects heat signatures. Radar is capable of seeing through fog and rain. Lidar (light detection and ranging) pierces the darkness and weaves a neon tapestry of laser beam threads.

“Every sensor has limitations, and every sensor covers another sensor’s back,” said Nathir Rawashdeh, assistant professor of computing in the College of Computing at Michigan Tech and one of the study’s lead researchers. He works on bringing the data from the sensors together using an AI process known as sensor fusion.

“To understand a scene, sensor fusion uses multiple sensors of different modalities,” he explained. “When the inputs have difficult patterns, it is impossible to program exhaustively for every detail. That is why we require AI.”

Nader Abu-Alrub, a doctoral student in electrical and computer engineering, and Jeremy Bos, an assistant professor of electrical and computer engineering, are among Rawashdeh’s Michigan Tech collaborators, as are master’s degree students and graduates from Bos’s lab: Akhil Kurup, Derek Chopp, and Zach Jeffries. According to Bos, lidar, infrared, and other sensors on their own are analogous to the hammer in an old adage. “Everything looks like a nail to a hammer,” said Bos. “Well, you have more options if you have a screwdriver and a rivet gun.”

The majority of autonomous sensors and self-driving algorithms are being developed in sunny, open environments. Bos’s lab began collecting local data in a Michigan Tech autonomous vehicle (safely driven by a human) during heavy snowfall, knowing that the rest of the world is not like Arizona or southern California. Rawashdeh’s team, led by Abu-Alrub, analyzed over 1,000 frames of lidar, radar, and image data from snowy roads in Germany and Norway to begin teaching their AI program what snow looks like and how to see past it.

“Not all snow is created equal,” Bos said, noting that the variety of snow makes sensor detection difficult. Rawashdeh went on to say that pre-processing the data and ensuring accurate labeling is a critical step in ensuring accuracy and safety: “AI is like a chef—if you have good ingredients, you’ll have an excellent meal,” he explained. “Give dirty sensor data to the AI learning network, and you’ll get a bad result.”

One issue is low-quality data, but another is actual dirt. Snow buildup on the sensors, like road grime, is a solvable but inconvenient issue. Even when the view is clear, autonomous vehicle sensors do not always agree on detecting obstacles. Bos gave a good example: a deer discovered while cleaning up locally collected data. The lidar said it was nothing (30% chance of being an obstacle), the camera saw it the way a sleepy human behind the wheel would (50%), and the infrared sensor said WHOA! (90% sure that is a deer).

Getting the sensors and their risk assessments to communicate and learn from one another is analogous to the Indian parable of three blind men who find an elephant: each touches a different part of the creature (its ear, trunk, or leg) and arrives at a different conclusion about what kind of animal it is. Rawashdeh and Bos want autonomous sensors to collectively figure out the answer, whether it’s an elephant, a deer, or a snowbank, using sensor fusion. “Rather than strictly voting,” said Bos, “we will come up with a new estimate by using sensor fusion.”
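
To make the difference between voting and fusing concrete, here is a minimal Python sketch that uses the three confidence values from the deer example. It is not the Michigan Tech team’s method, which the article does not describe in detail. It is a textbook log-odds combination that assumes the sensors err independently and that the prior chance of an obstacle is 50%. The function names `majority_vote` and `fuse_log_odds` are hypothetical and chosen only for illustration.

```python
import math

def majority_vote(probs, threshold=0.5):
    """Each sensor casts a yes/no vote; the majority decides."""
    votes = sum(p > threshold for p in probs.values())
    return votes > len(probs) / 2

def fuse_log_odds(probs, prior=0.5):
    """Combine sensor confidences by summing log-odds.

    Assumes (for illustration only) that the sensors are independent
    and that every probability lies strictly between 0 and 1.
    """
    prior_logit = math.log(prior / (1 - prior))
    total = prior_logit
    for p in probs.values():
        # Add the evidence each sensor contributes beyond the prior.
        total += math.log(p / (1 - p)) - prior_logit
    return 1 / (1 + math.exp(-total))

# Confidence values from the deer example in the article.
readings = {"lidar": 0.30, "camera": 0.50, "infrared": 0.90}

voted = majority_vote(readings)   # only infrared votes "obstacle"
fused = fuse_log_odds(readings)   # a single combined estimate

print(f"majority vote says obstacle: {voted}")
print(f"fused obstacle probability: {fused:.3f}")
```

Under these assumptions, a strict vote dismisses the deer because only one of three sensors is confident, while the fused estimate stays well above 50% because the infrared sensor’s strong signal outweighs the lidar’s mild doubt. A real system would also have to account for correlated sensor errors and for weather-dependent reliability, which this sketch ignores.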

While navigating a Keweenaw blizzard is a long way off for self-driving cars, their sensors can improve at learning about bad weather and, with advances like sensor fusion, will be able to drive safely on snowy roads one day.