Researchers have created a control framework that allows robots to understand what it means to help or hinder one another and to incorporate social reasoning into the tasks they perform. Robots can deliver food on a college campus and hit a hole in one on the golf course, but even the most sophisticated robot cannot perform the basic social interactions that are critical to everyday human life.

Researchers at MIT have now incorporated certain social interactions into a robotics framework, allowing machines to understand what it means to help or hinder one another and to learn to perform these social behaviors on their own. In a simulated environment, a robot observes its companion, guesses what task it wants to complete, and then assists or hinders this other robot based on its own objectives.

In addition, the researchers demonstrated that their model generates realistic and predictable social interactions. When they showed human viewers videos of these simulated robots interacting with one another, the human viewers mostly agreed with the model about the type of social behavior that was taking place.

Allowing robots to exhibit social skills could result in more pleasant and positive human-robot interactions. A robot in an assisted living facility, for example, could use these capabilities to help create a more caring environment for elderly people. The new model may also allow scientists to quantify social interactions, which could aid psychologists in studying autism or analyzing the effects of antidepressants.

“Robots will soon be a part of our world, and they will need to learn how to communicate with us on human terms. They must understand when it is appropriate to assist and when it is appropriate to consider what they can do to prevent something from occurring. This is preliminary research, and we are only scratching the surface, but I believe this is the first serious attempt to understand what it means for humans and machines to interact socially,” says Boris Katz, principal research scientist and head of the InfoLab Group at the Computer Science and Artificial Intelligence Laboratory (CSAIL), and a member of the Center for Brains, Minds, and Machines (CBMM).

Joining Katz on the paper are co-lead author Ravi Tejwani, a research assistant at CSAIL; co-lead author Yen-Ling Kuo, a CSAIL Ph.D. student; Tianmin Shu, a postdoc in the Department of Brain and Cognitive Sciences; and senior author Andrei Barbu, a research scientist at CSAIL and CBMM. The findings will be presented at the Conference on Robot Learning.

A social simulation

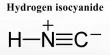

To study social interactions, the researchers created a simulated environment in which robots pursue physical and social goals as they move around a two-dimensional grid. A physical goal relates to the environment. A robot’s physical goal, for example, could be to navigate to a tree at a specific point on the grid. A social goal involves guessing what another robot is attempting to do and then acting on that guess, such as helping another robot water a tree.

The researchers use their model to specify a robot’s physical goals, its social goals, and how much emphasis it should place on one over the other. The robot is rewarded for actions that bring it closer to achieving its goals. If a robot is attempting to assist its companion, it adjusts its reward to match the other robot’s; if it is attempting to hinder, it adjusts its reward to be the opposite of the other robot’s. The planner, an algorithm that determines which actions the robot should take, uses this continually updated reward to guide the robot toward a combination of physical and social goals.
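The reward adjustment described above can be illustrated with a minimal sketch. It assumes a simple linear blend between the robot’s own physical reward and its estimate of the other robot’s reward; the names `combined_reward` and `pick_action`, the `social_weight` parameter, and the +1/-1 encoding of helping and hindering are hypothetical choices made for illustration, not the researchers’ actual formulation.

```python
from typing import Dict, Tuple


def combined_reward(own_physical: float,
                    other_estimated: float,
                    social_weight: float,
                    attitude: int) -> float:
    """Blend a robot's physical reward with a social term (illustrative only).

    attitude: +1 to help (adopt the other robot's estimated reward),
              -1 to hinder (adopt its negation), 0 for no social goal.
    social_weight: emphasis on the social goal, between 0 and 1 (assumed).
    """
    if attitude not in (-1, 0, 1):
        raise ValueError("attitude must be -1, 0, or +1")
    if not 0.0 <= social_weight <= 1.0:
        raise ValueError("social_weight must be between 0 and 1")
    social_term = attitude * other_estimated
    return (1.0 - social_weight) * own_physical + social_weight * social_term


def pick_action(candidates: Dict[str, Tuple[float, float]],
                social_weight: float,
                attitude: int) -> str:
    """Greedy one-step stand-in for a planner (an assumption, not the paper's).

    candidates maps an action name to a pair:
    (own physical reward, estimated reward of the other robot).
    """
    return max(
        candidates,
        key=lambda a: combined_reward(candidates[a][0], candidates[a][1],
                                      social_weight, attitude),
    )


# Hypothetical rewards for two actions on the grid.
actions = {
    "go_to_own_tree": (1.0, 0.0),
    "water_other_tree": (0.2, 1.0),
}
helper_choice = pick_action(actions, social_weight=0.8, attitude=1)
hinderer_choice = pick_action(actions, social_weight=0.8, attitude=-1)
```

With these made-up numbers, a helper that weights the social goal heavily prefers the action that benefits its companion, while a hinderer avoids it. A real planner would look many steps ahead and re-estimate the other robot’s goal as it goes.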

“We’ve created a new mathematical framework for modeling social interaction between two agents. If you are a robot and want to go to location X, and I am another robot and see that you are attempting to go to location X, I can help you get there faster. This could mean bringing X closer to you, finding a better X, or taking whatever action you needed to take at X. Our formulation enables the plan to discover the ‘how’; we specify the ‘what’ mathematically in terms of what social interactions mean,” Tejwani adds.

It is critical to combine a robot’s physical and social goals in order to create realistic interactions because humans who help one another have limits to how far they will go. A rational person, for example, would not simply hand a stranger their wallet, according to Barbu.

The researchers used this mathematical framework to define three types of robots. A level 0 robot has only physical goals and cannot reason socially. A level 1 robot has both physical and social goals but assumes that all other robots have only physical goals. Level 1 robots can help or hinder other robots by acting on what they take those robots’ physical goals to be. A level 2 robot assumes that other robots have both social and physical goals; these robots can take more sophisticated actions, such as joining in to help together.
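The three levels can be summarized in a short sketch. The `Level` enum and the helper functions below are hypothetical names introduced for illustration, and treating a level 0 robot as attributing no goals at all to other robots is an assumption drawn from the statement that it cannot reason socially.

```python
from enum import IntEnum
from typing import FrozenSet


class Level(IntEnum):
    """Reasoning levels as described in the article (names are illustrative)."""
    PHYSICAL_ONLY = 0            # physical goals only, no social reasoning
    SOCIAL_ASSUMES_PHYSICAL = 1  # social goals; others assumed physical-only
    SOCIAL_ASSUMES_SOCIAL = 2    # social goals; others assumed social too


def has_social_goals(level: Level) -> bool:
    """Level 1 and level 2 robots have social goals; level 0 robots do not."""
    return level >= Level.SOCIAL_ASSUMES_PHYSICAL


def goals_attributed_to_others(level: Level) -> FrozenSet[str]:
    """Which kinds of goals a robot at this level assumes other robots have."""
    if level == Level.PHYSICAL_ONLY:
        # Assumption: a robot that cannot reason socially models no one's goals.
        return frozenset()
    if level == Level.SOCIAL_ASSUMES_PHYSICAL:
        return frozenset({"physical"})
    return frozenset({"physical", "social"})
```

For example, `goals_attributed_to_others(Level.SOCIAL_ASSUMES_SOCIAL)` includes `"social"`, which is what lets a level 2 robot recognize that a companion is itself trying to help and join in.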

Evaluating the model

To see how their model compared to human perspectives on social interactions, the researchers created 98 different scenarios with robots at levels 0, 1, and 2. Twelve humans watched 196 video clips of the robots interacting and were then asked to estimate the robots’ physical and social goals. In most cases, the model agreed with what the humans thought about the social interactions taking place in each frame.

“We have a long-term interest in both developing computational models for robots and delving deeper into the human aspects of this. We want to know which aspects of these videos humans use to understand social interactions. Can we create a standardized test to assess your ability to recognize social interactions? Perhaps there is a way to train people to recognize these social interactions and improve their skills. We’re a long way from there, but even being able to effectively measure social interactions is a big step forward,” Barbu says.

Toward greater sophistication

The researchers are working on creating a system with 3D agents in an environment that allows for a broader range of interactions, such as the manipulation of household objects. They also intend to revise their model to include scenarios in which actions may fail.

In addition, the researchers want to incorporate a neural network-based robot planner into the model, which learns from experience and performs faster. Finally, they hope to conduct an experiment to collect data on the features humans use to determine whether two robots are interacting socially.

“Hopefully, we’ll have a benchmark that will allow all researchers to work on these social interactions and inspire the kinds of scientific and engineering advances we’ve seen in other areas like object and action recognition,” Barbu says.