Researchers from UTSA, UCF, the Air Force Research Laboratory (AFRL), and SRI International have developed a new method for improving how artificial intelligence learns to see.

The team, led by Sumit Jha, professor in the Department of Computer Science at UTSA, has altered the traditional approach used in explaining machine learning decisions, which relies on a single injection of noise into the input layer of a neural network.

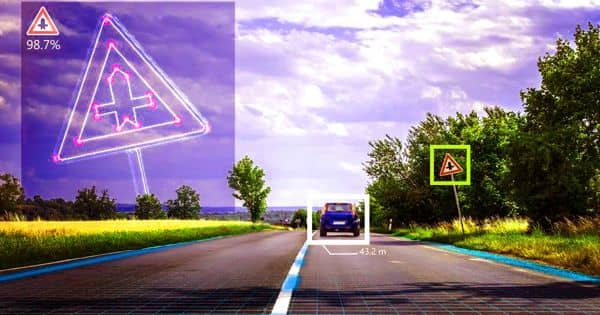

The researchers demonstrate that adding noise (also known as pixelation) across multiple layers of a network provides a more robust representation of an image recognized by AI and creates more robust explanations for AI decisions. This work contributes to the development of “explainable AI,” which aims to enable high-assurance AI applications such as medical imaging and autonomous driving.


“It’s about putting noise in every layer,” Jha explained. “In all of its internal layers, the network is now forced to learn a more robust representation of the input. If each layer experiences more perturbations in each training, the image representation will be more robust, and the AI will not fail simply because a few pixels of the input image are changed.”
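The contrast Jha describes, noise at the input only versus noise in every layer, can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the team's implementation: the tiny fully connected network, the Gaussian noise model, and the names `forward`, `add_noise`, and `noise_everywhere` are all hypothetical.

```python
import random

def relu(v):
    return [max(0.0, x) for x in v]

def matvec(W, v):
    # Multiply a weight matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def add_noise(v, sigma, rng):
    # Perturb every entry with zero-mean Gaussian noise (assumed noise model).
    return [x + rng.gauss(0.0, sigma) for x in v]

def forward(x, layers, sigma, rng, noise_everywhere=True):
    """Run x through the layers, perturbing activations along the way.

    noise_everywhere=False perturbs only the input (the single-injection
    approach); noise_everywhere=True also perturbs each layer's output.
    """
    h = add_noise(x, sigma, rng)
    for W in layers:
        h = relu(matvec(W, h))
        if noise_everywhere:
            h = add_noise(h, sigma, rng)
    return h
```

Calling `forward(x, layers, 0.1, random.Random(0))` repeatedly with different seeds exposes each layer to a slightly different activation every time, which is the kind of perturbation the quote refers to.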

Many business applications rely on computer vision, or the ability to recognize images. Computer vision can help identify areas of concern in the livers and brains of cancer patients. This type of machine learning can also be used in a variety of other industries: by manufacturers to detect defect rates, by drones to detect pipeline leaks, and by agriculturists to detect early signs of crop disease in order to improve yields.

Deep learning trains a computer to perform tasks such as speech recognition, image recognition, and prediction. Rather than organizing data to run through set equations, deep learning sets basic parameters about a data set and trains the computer to learn on its own by recognizing patterns using many layers of processing.

The team’s work, led by Jha, represents a significant advancement over previous work he has done in this field. In a 2019 paper presented at the AI Safety workshop co-located with that year’s International Joint Conference on Artificial Intelligence (IJCAI), Jha, his students, and colleagues from Oak Ridge National Laboratory demonstrated how poor environmental conditions can lead to dangerous neural network performance. A computer vision system was asked to identify a minivan on the road, and it did so successfully. His team then added a small amount of fog and asked the network the same question again: the AI identified the minivan as a fountain. The paper was selected as a best paper candidate.

In most neural ordinary differential equation (ODE) models, a machine is trained by passing one input through one network; the signal then propagates through the hidden layers to produce one response in the output layer. The team of researchers from UTSA, UCF, AFRL, and SRI employs a more dynamic approach based on stochastic differential equations (SDEs). Using the connection between dynamical systems and neural networks, the researchers demonstrate that neural SDEs result in less noisy, visually sharper, and quantitatively more robust attributions than neural ODEs.
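The difference between the two model families can be illustrated with a discretized update rule. In the sketch below, a plain Euler step stands in for the ODE view and an Euler-Maruyama step, which adds a random term scaled by the square root of the step size, stands in for the SDE view. The `drift` function is a hypothetical toy placeholder for a learned layer function, and `integrate` is an illustrative name; neither comes from the paper.

```python
import math
import random

def drift(h):
    # Hypothetical toy dynamics standing in for a learned layer function.
    return [-x for x in h]

def integrate(h0, steps, dt, sigma=0.0, rng=None):
    """Evolve the hidden state h over a number of discrete steps.

    sigma == 0 gives a deterministic Euler scheme (ODE view);
    sigma > 0 gives an Euler-Maruyama scheme (SDE view), where each
    step also receives Gaussian noise scaled by sigma * sqrt(dt).
    """
    rng = rng or random.Random()
    h = list(h0)
    for _ in range(steps):
        f = drift(h)
        h = [x + fx * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
             for x, fx in zip(h, f)]
    return h
```

With `sigma=0.0` the same input always produces the same trajectory. With `sigma > 0` each run follows a slightly different path through the hidden states, so a model of this kind experiences a spread of internal representations rather than a single one.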

Because noise is injected into multiple layers of the neural network, the SDE approach learns not from a single image but from a set of nearby images. The initial model is based on evolving image characteristics and conditions, so as more noise is injected, the machine learns evolving approaches and finds better ways to produce explanations, or attributions. The SDE approach outperforms several other attribution methods, such as saliency maps and integrated gradients.
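For readers unfamiliar with the two attribution methods named above, the following sketch computes a saliency map and integrated gradients for a toy model. It assumes a hypothetical two-feature scoring function `model` and estimates gradients by finite differences; real systems would apply automatic differentiation to an actual network, and none of this code is taken from the paper.

```python
def model(x):
    # Hypothetical toy "network": a smooth scalar score of a 2-pixel input.
    return x[0] ** 2 + 3.0 * x[1]

def gradient(f, x, eps=1e-5):
    """Central finite-difference estimate of df/dx_i for each input feature."""
    grads = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads

def saliency(f, x):
    # Saliency map: magnitude of the gradient of the score at the input.
    return [abs(g) for g in gradient(f, x)]

def integrated_gradients(f, x, baseline, steps=50):
    """Average gradients along the straight path from baseline to x
    (midpoint Riemann sum), then scale by the input difference."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(f, point)):
            total[i] += g / steps
    return [(xi - b) * t for xi, b, t in zip(x, baseline, total)]
```

A useful sanity check is that the integrated-gradients attributions sum, up to numerical error, to the difference between the model's score at the input and at the baseline.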

Jha describes the new research in the paper “On Smoother Attributions Using Neural Stochastic Differential Equations.” Contributors to this novel approach include Richard Ewetz of UCF, Alvaro Velazquez of AFRL, and Susmit Jha of SRI. The Defense Advanced Research Projects Agency, the Office of Naval Research, and the National Science Foundation all contribute to the lab’s funding. The findings will be presented at IJCAI 2021, a conference with a 14 percent acceptance rate. Facebook and Google have previously presented at this highly selective conference.

“I am delighted to share the fantastic news that our paper on explainable AI has just been accepted at IJCAI,” Jha added. “This is a big opportunity for UTSA to be part of the global conversation on how a machine sees.”