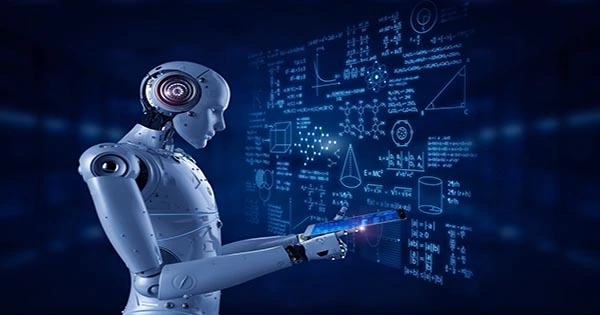

Geopolitical actors have always used technology to pursue their objectives. Unlike previous technologies, however, artificial intelligence (AI) is much more than a tool. We don’t want to anthropomorphize AI or imply that it has goals of its own. It isn’t a moral agent, at least not yet.

Nevertheless, it is quickly becoming a major factor in our collective fate. We believe that AI is already challenging the foundations of global peace and security, both because of its unique traits and because of its influence on other fields such as biotechnology and nanotechnology.

AI technology is advancing rapidly and finding a widening range of applications (the global AI industry is predicted to grow more than ninefold between 2020 and 2028). As a result, AI systems are being widely deployed without adequate regulatory oversight or consideration of their ethical implications. This gap between technological change and its governance, known as the pacing problem, has left legislators and executive branches unable to keep up.

After all, the consequences of new technology are often difficult to predict. Smartphones and social media were part of our everyday lives long before we realized how dangerous they could be if misused. Similarly, it takes time to understand what facial recognition technology means for privacy and human rights.

Some nations will use AI to shape public opinion by controlling what information people see, and to restrict freedom of speech through surveillance. Looking ahead, we have no way of knowing which of today’s research directions will lead to breakthroughs, or how those innovations will interact with one another and with the wider world.

These problems are particularly acute in AI, because the way learning algorithms reach their conclusions is sometimes opaque. It can be difficult, if not impossible, to pinpoint the cause of harmful outcomes. And it is impossible to assess and certify the safety of systems that continually learn and change their behavior.

AI systems can act with little or no human involvement. One does not need to read science fiction to anticipate the dangers. Autonomous systems risk undermining the principle that there should always be a human or corporate agent who can be held accountable for actions in the world, particularly in matters of war and peace.

We can’t hold the systems themselves accountable, and those who deploy them will argue that they aren’t to blame when the systems behave erratically. In short, we believe that human societies are not politically, legally, or ethically equipped for AI. Nor is the world prepared for the ethical consequences of how AI will reshape geopolitics and international relations. In our view, this could happen in three ways.