A research team reports that, using an artificial neural network model, it has identified how musical instincts can emerge in the human brain without any special learning. Music, often called the universal language, is believed to be a common component of all cultures. Could ‘musical instinct’ be something that is shared to some extent across cultures, despite the vast differences in their contexts?

On January 16, a KAIST research team led by Professor Hawoong Jung of the Department of Physics announced that, using an artificial neural network model, it had identified the mechanism by which musical instincts emerge in the human brain without specific learning.

Many researchers have previously tried to identify the similarities and differences between the music of various cultures and to understand where its universality comes from. A 2019 study published in Science showed that music is produced in all ethnographically distinct cultures, with similar beats and tunes used. Neuroscientists have also found that a specific part of the human brain, the auditory cortex, is responsible for processing musical information.

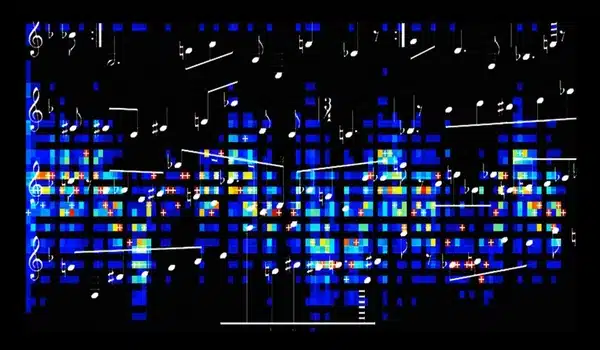

Professor Jung’s team used an artificial neural network model to show that cognitive functions for music emerge spontaneously as a result of processing auditory information received from nature, rather than from being taught music. The research team used AudioSet, a large-scale collection of sound data provided by Google, to train the artificial neural network to recognize a variety of sounds. Interestingly, the researchers found that certain neurons in the network model responded selectively to music. In other words, they observed the spontaneous formation of neurons that reacted only weakly to other sounds, such as those of animals, nature, or machines, while responding strongly to various forms of music, both instrumental and vocal.
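To make the idea of a "music-selective" neuron concrete, the following is a minimal sketch of one way such units could be picked out from recorded activations. It is an illustration only, not the team's published procedure: the Welch-style t statistic, the threshold of 2.0, the function names, and the toy activation values are all assumptions introduced here.

```python
from math import sqrt
from statistics import mean, variance


def selectivity_index(music_responses, other_responses):
    """Welch-style t statistic: positive when a unit responds more to music.

    Assumes each group has at least two values and non-zero variance.
    """
    standard_error = sqrt(
        variance(music_responses) / len(music_responses)
        + variance(other_responses) / len(other_responses)
    )
    return (mean(music_responses) - mean(other_responses)) / standard_error


def music_selective_units(responses, is_music, threshold=2.0):
    """Return the names of units whose selectivity index exceeds `threshold`.

    responses: dict mapping a unit name to its activations, one per clip.
    is_music:  list of booleans, True where the clip is music.
    """
    selected = []
    for unit, activations in responses.items():
        music = [a for a, m in zip(activations, is_music) if m]
        other = [a for a, m in zip(activations, is_music) if not m]
        if selectivity_index(music, other) > threshold:
            selected.append(unit)
    return selected


# Hypothetical activations for six clips (three music, three non-music).
is_music = [True, True, True, False, False, False]
responses = {
    "unit_a": [0.9, 0.8, 1.0, 0.1, 0.2, 0.0],  # strong for music only
    "unit_b": [0.5, 0.4, 0.6, 0.5, 0.6, 0.4],  # no preference
}
selected_units = music_selective_units(responses, is_music)
print(selected_units)
```

In this toy case only the unit that responds strongly to the music clips and weakly to the rest passes the threshold.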

The neurons in the artificial neural network model showed response behaviours similar to those of neurons in the auditory cortex of a real brain. For example, the artificial neurons responded less to music that had been cut into short segments and rearranged. This indicates that the spontaneously generated music-selective neurons encode the temporal structure of music. This property was not limited to a specific genre of music, but emerged across 25 different genres including classical, pop, rock, jazz, and electronic.
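The scrambling control described above can be sketched in a few lines. This is a hedged illustration rather than the study's actual stimulus pipeline: the function name, the segment length, and the fixed seed are assumptions, and a list of integers stands in for audio samples.

```python
import random


def scramble_segments(samples, segment_len, seed=0):
    """Cut `samples` into consecutive chunks and shuffle the chunk order.

    The order of samples inside each chunk is preserved, so short-range
    structure survives while long-range temporal structure is destroyed.
    """
    segments = [
        samples[i:i + segment_len] for i in range(0, len(samples), segment_len)
    ]
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    rng.shuffle(segments)
    return [x for segment in segments for x in segment]


# Stand-in for an audio clip: 12 "samples" cut into 4 segments of 3.
clip = list(range(12))
scrambled = scramble_segments(clip, segment_len=3)
print(scrambled)
```

Comparing a unit's response to `clip` and to `scrambled` is one way to check whether it depends on the order of the segments and not only on their content.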

Furthermore, suppressing the activity of the music-selective neurons was found to greatly reduce the recognition accuracy for other natural sounds. In other words, the neural function that processes musical information also helps process other sounds, which suggests that ‘musical ability’ may be an instinct formed through evolutionary adaptation to better process sounds from nature.
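The suppression experiment follows a general pattern often called ablation: silence a chosen set of units, then measure recognition accuracy again. The sketch below shows only that pattern. The feature vectors, labels, and readout weights are invented toy values, not data or results from the study, and the simple linear readout is an assumption.

```python
def ablate(features, units):
    """Return a copy of each feature vector with the given unit indices zeroed."""
    units = set(units)
    return [
        [0.0 if j in units else value for j, value in enumerate(row)]
        for row in features
    ]


def predict(row, weights):
    """Pick the class whose weight vector has the largest dot product with `row`."""
    scores = [sum(w * v for w, v in zip(class_weights, row)) for class_weights in weights]
    return scores.index(max(scores))


def accuracy(features, targets, weights):
    """Fraction of rows whose predicted class matches the target."""
    hits = sum(predict(row, weights) == t for row, t in zip(features, targets))
    return hits / len(targets)


# Toy activations of three units for four clips, with two sound classes.
features = [
    [1.0, 0.2, 0.1],
    [0.9, 0.1, 0.1],
    [0.1, 0.2, 1.0],
    [0.0, 0.1, 0.8],
]
targets = [0, 0, 1, 1]
weights = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # hypothetical linear readout

baseline = accuracy(features, targets, weights)
after_ablation = accuracy(ablate(features, [0]), targets, weights)
print(baseline, after_ablation)
```

A drop from `baseline` to `after_ablation` is the kind of evidence that the silenced units were contributing to recognition.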

Professor Hawoong Jung, who advised the research, stated, “The results of our study imply that evolutionary pressure has contributed to forming the universal basis for processing musical information in various cultures.” Regarding the significance of the study, he said, “We look forward to this artificially built model with human-like musicality becoming an original model for various applications, including AI music generation, music therapy, and research in musical cognition.”

He also addressed its limitations, saying, “This research, however, does not take into consideration the developmental process that follows the learning of music, and it must be noted that this is a study on the foundation of processing musical information in early development.”