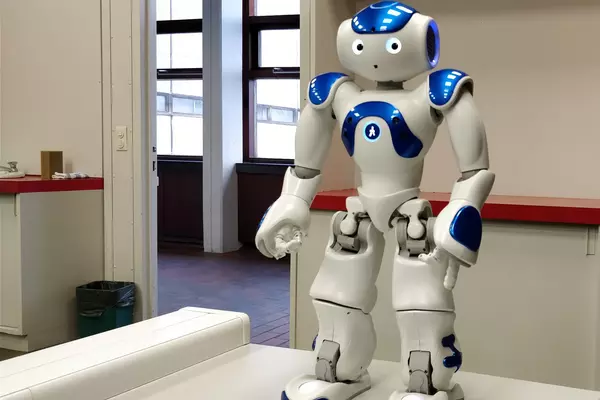

A virtual reality (VR) robot stand-in combines a physical robot with a virtual environment so that a remote person can be represented in a real space. Cornell and Brown University researchers have created a telepresence robot that responds automatically and in real time to a remote user’s virtual reality movements and gestures.

The VRoxy robotic system allows a remote user in a small environment, such as an office, to work with teammates in a much larger space via virtual reality. VRoxy is the most recent advancement in remote, robotic embodiment.

When wearing a VR headset, the user has two view modes. Live mode presents an immersive view of the collaborative area in real time for interactions with local collaborators, whereas navigational mode displays rendered pathways of the room, allowing remote users to “teleport” to where they want to go. Navigational mode allows the remote user to move more quickly and smoothly, reducing motion sickness.

According to the researchers, the system’s autonomous nature allows remote partners to focus entirely on communication rather than physically piloting the robot.

“The great benefit of virtual reality is that we can leverage all kinds of locomotion techniques that people use in virtual reality games, like instantly moving from one position to another,” said Mose Sakashita, a doctoral student in information science at Cornell. “This functionality enables remote users to physically occupy a very limited amount of space but collaborate with teammates in a much larger remote environment.”

“VRoxy: Wide-Area Collaboration From an Office Using a VR-Driven Robotic Proxy,” of which Sakashita is the lead author, will be presented at the ACM Symposium on User Interface Software and Technology (UIST).

VRoxy’s automatic, real-time responsiveness is key for both remote and local teammates, researchers said. With a robot proxy like VRoxy, a remote teammate confined to a small office can take part in a group activity held in a much larger space, such as a design collaboration.

For colleagues, the VRoxy robot automatically mimics the user’s body position and other important nonverbal cues that are otherwise lost with telepresence robots and on Zoom. For example, VRoxy’s monitor, which displays a rendering of the user’s face, tilts according to where the user is focusing.

The robot is equipped with a 360-degree camera, a monitor that displays facial expressions captured by the user’s VR headset, a robotic pointer finger, and omnidirectional wheels.

Sakashita plans to enhance VRoxy with robotic arms in the future, allowing remote users to interact with tangible objects in the live space via the robot proxy.