DeepMind’s Open-Ended Learning Team has created a new method for teaching AI systems to play games. Rather than training on millions of previously played games, as other game-playing AI systems do, the team’s agents start with a minimal set of skills, use them to achieve a simple goal (such as spotting another player in a virtual world), and then build on what they have learned.

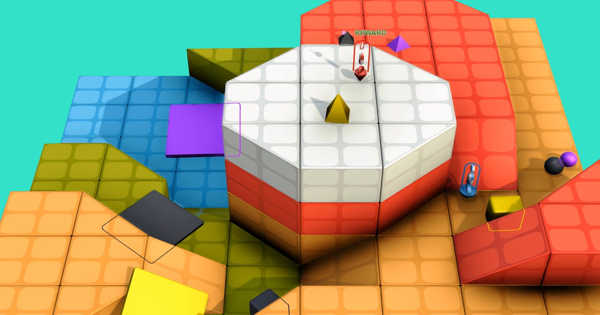

The researchers created XLand, a colorful virtual world that looks like a video game. In it, AI players, which the researchers call agents, set out to achieve a general goal, and as they do so, they learn skills that they can use to achieve other goals. The researchers then change the game, giving the agents a new goal while letting them keep the skills they learned in previous games. The group has published a paper describing their efforts on the arXiv preprint server.


One example of the technique is an agent trying to reach a part of its world that is too high to climb onto directly and has no access points such as stairs or ramps. While bumbling around, the agent discovers that it can use a flat object it finds as a ramp to get where it needs to go. To help their agents learn new skills, the researchers devised 700,000 scenarios, or games, in which the agents faced approximately 3.4 million distinct tasks. With this method, the agents taught themselves to play a variety of games, including tag, capture the flag, and hide and seek. The researchers describe their approach as endlessly challenging.

Another intriguing aspect of XLand is the existence of a sort of overlord, an entity that monitors the agents and notes which skills they are learning, then generates new games to strengthen their skills. With this approach, the agents will continue to learn as long as new tasks are assigned to them.
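The idea of a supervisor that watches what the agents have learned and picks the next game can be illustrated with a toy sketch. This is not DeepMind's implementation: the function names, the task names, the success-rate bookkeeping, and the 0.2 to 0.8 "useful difficulty" band are all assumptions made for illustration.

```python
import random


def pick_next_task(success_rates, rng, low=0.2, high=0.8):
    """Pick a task that is neither too easy nor too hard for the agent.

    success_rates maps a (hypothetical) task name to the fraction of
    recent attempts the agent has won. The low/high band is an assumed
    heuristic, not a value taken from the article.
    """
    candidates = [t for t, r in success_rates.items() if low <= r <= high]
    if candidates:
        return rng.choice(candidates)
    # No task falls inside the band: take the one closest to its middle.
    middle = (low + high) / 2
    return min(success_rates, key=lambda t: abs(success_rates[t] - middle))


def update_success_rate(success_rates, task, succeeded, step=0.1):
    """Nudge the stored success rate toward the latest result."""
    outcome = 1.0 if succeeded else 0.0
    success_rates[task] = (1 - step) * success_rates[task] + step * outcome


# Hypothetical bookkeeping for three of the games named in the article.
rates = {"tag": 0.95, "hide_and_seek": 0.5, "capture_the_flag": 0.05}
next_task = pick_next_task(rates, random.Random(0))
update_success_rate(rates, next_task, succeeded=True)
```

In this sketch the already mastered game (`tag`) and the currently hopeless one (`capture_the_flag`) are skipped in favor of the game where practice is most likely to pay off, which is one simple way to keep new tasks challenging as skills improve.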

The researchers found that, as the agents ran in the virtual world, they learned skills they found useful and then built on them, leading to more advanced behaviors such as experimenting when they ran out of options, cooperating with other agents, and using objects as tools. They argue that their method is a step toward generally capable algorithms that can learn to play new games on their own, skills that could one day be used by autonomous robots.

Machine Learning (ML) is maturing, with growing recognition that it can play a critical role in a wide range of applications such as data mining, natural language processing, image recognition, and expert systems. ML has the potential to provide solutions in all of these domains and more, and it is poised to become a pillar of our future civilization.

Computational Intelligence (CI), also called Soft Computing, is an approach to advanced information processing. CI methods aim to analyze and reproduce the flexible information processing that humans perform, such as sensing, understanding, learning, recognizing, and thinking.

Machine Learning is heavily based on statistics. When we train a model, for example, we must provide it with a sufficiently large, representative random sample of data as training data. If the training set is not random, we risk learning patterns that do not exist in the wider population. Furthermore, if the training set is too small, the model will not learn enough and may even lead us to incorrect conclusions.
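Both risks can be seen in a small experiment. The following is a minimal sketch on synthetic data: the population (10,000 values drawn from a normal distribution with mean 50 and standard deviation 10), the sample sizes, and the helper names are all assumptions chosen for illustration.

```python
import random
import statistics


def mean_error(population, sample):
    """Absolute gap between the sample mean and the population mean."""
    return abs(statistics.mean(sample) - statistics.mean(population))


def spread_of_sample_means(population, size, trials, rng):
    """Standard deviation of the sample mean over repeated random draws."""
    means = [statistics.mean(rng.sample(population, size)) for _ in range(trials)]
    return statistics.stdev(means)


rng = random.Random(0)
# Synthetic population; the distribution parameters are illustrative only.
population = [rng.gauss(50, 10) for _ in range(10_000)]

# Non-random sample: only the 500 smallest values are kept.
biased_sample = sorted(population)[:500]
# Random sample of the same size.
random_sample = rng.sample(population, 500)

biased_error = mean_error(population, biased_sample)
random_error = mean_error(population, random_sample)

# How much the estimate varies from draw to draw at two sample sizes.
spread_small = spread_of_sample_means(population, 5, 200, rng)
spread_large = spread_of_sample_means(population, 500, 200, rng)

print(f"error with biased sample: {biased_error:.2f}")
print(f"error with random sample: {random_error:.2f}")
print(f"spread of the mean, n=5:   {spread_small:.2f}")
print(f"spread of the mean, n=500: {spread_large:.2f}")
```

The biased sample misses the true mean by a wide margin because it only ever sees one corner of the data, while estimates from very small random samples swing noticeably from one draw to the next. A model trained on either kind of data inherits the same problems.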