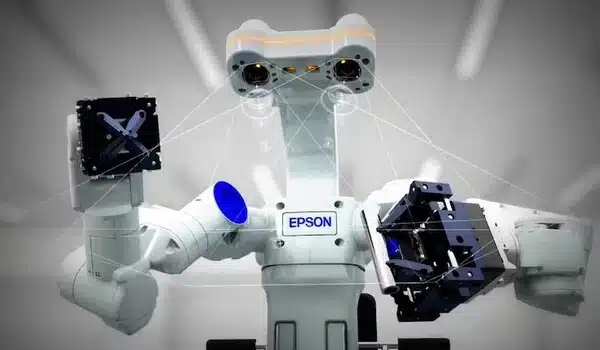

Dual-arm robots that learn bimanual tasks in simulation are a significant advance in robotics and artificial intelligence. With two manipulator arms, they can carry out tasks that require both arms to cooperate.

A new bimanual robot shows tactile sensitivity comparable to human dexterity while using AI to guide its actions. The Bi-Touch system, developed by scientists at the University of Bristol and housed at the Bristol Robotics Laboratory, enables robots to carry out manual tasks by sensing what to do from a digital assistant.

The findings, published in IEEE Robotics and Automation Letters, show how an AI agent interprets its environment through tactile and proprioceptive feedback and then controls the robots’ behaviors to accomplish tasks that call for precise sensing, gentle interaction, and effective object manipulation. This advance could transform industries such as fruit harvesting and domestic service, and could eventually help recreate touch in artificial limbs.

Lead author Yijiong Lin, from the Faculty of Engineering, explained: “With our Bi-Touch system, we can easily train AI agents in a virtual world within a couple of hours to achieve bimanual tasks that are tailored towards the touch. More importantly, we can directly apply these agents from the virtual world to the real world without further training. The tactile bimanual agent can solve tasks even under unexpected perturbations and manipulate delicate objects in a gentle way.”

Human-level robot dexterity will require bimanual manipulation with tactile feedback. However, this problem has received less attention than single-arm settings, owing in part to the scarcity of suitable hardware and the difficulty of building effective controllers for tasks with relatively large state-action spaces. Drawing on recent developments in AI and robotic tactile sensing, the team was able to create a tactile dual-arm robotic system.

The researchers created a virtual world (simulation) containing two robot arms outfitted with touch sensors. They then designed reward functions and a goal-update mechanism to encourage the robot agents to learn the bimanual tasks, and built a real-world tactile dual-arm robot system to which they could directly apply the trained agent.

The robot learns its bimanual skills through Deep Reinforcement Learning (Deep-RL), one of the most advanced techniques in the field of robot learning. It teaches robots to do things by letting them learn through trial and error, similar to how a dog is trained with rewards and punishments.

For robotic manipulation, the robot learns to make decisions by attempting many different behaviors to accomplish specific objectives, such as lifting objects without dropping or breaking them. It receives a reward when it succeeds, and when it fails, it learns what not to do. Over time, these rewards and penalties lead it to the best ways of grasping things. The AI agent works without vision and relies solely on proprioceptive feedback (the body's ability to sense movement, action, and position) and tactile feedback.
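The reward-and-penalty loop described above can be illustrated with a very small sketch. This is not the Bi-Touch code and it is not Deep-RL: it is a plain epsilon-greedy value learner on a hypothetical one-step task, in which an agent picks one of five grip-force levels and only one level lifts the object without dropping or crushing it. The task, the names `FORCE_LEVELS`, `TARGET_LEVEL`, `reward`, and `train`, and all parameter values are assumptions made purely for illustration.

```python
import random
from typing import List

# Hypothetical toy task (an assumption, not from the Bi-Touch paper):
# level 2 lifts the object, lower levels drop it, higher levels crush it.
FORCE_LEVELS = [0, 1, 2, 3, 4]
TARGET_LEVEL = 2


def reward(level: int) -> float:
    """Return +1 for a successful lift, -1 for a drop or a break."""
    return 1.0 if level == TARGET_LEVEL else -1.0


def train(episodes: int = 500, epsilon: float = 0.1,
          lr: float = 0.1, seed: int = 0) -> List[float]:
    """Learn one value estimate per force level by trial and error."""
    rng = random.Random(seed)
    values = [0.0] * len(FORCE_LEVELS)
    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: try a random force level.
            action = rng.randrange(len(FORCE_LEVELS))
        else:
            # Exploit: pick the level with the highest current estimate.
            action = max(range(len(FORCE_LEVELS)), key=lambda a: values[a])
        # Nudge the estimate for the tried level towards the observed reward.
        values[action] += lr * (reward(FORCE_LEVELS[action]) - values[action])
    return values


if __name__ == "__main__":
    learned = train()
    best = max(range(len(learned)), key=lambda a: learned[a])
    print("learned values:", [round(v, 2) for v in learned])
    print("preferred force level:", FORCE_LEVELS[best])
```

A Deep-RL system such as the one described in the article replaces the small table of values with a neural network and feeds it tactile and proprioceptive observations, but the underlying idea of adjusting behavior according to rewards and penalties is the same.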

The researchers succeeded in getting the dual-arm robot to lift items as delicate as a single Pringle crisp.

“Our Bi-Touch system showcases a promising approach with affordable software and hardware for learning bimanual behaviors with touch in simulation, which can be directly applied to the real world,” co-author Professor Nathan Lepora remarked. “Because the code for our tactile dual-arm robot simulation will be open-source, it will be perfect for building new downstream tasks.”

“Our Bi-Touch system enables a tactile dual-arm robot to learn from simulation and perform various manipulation tasks in a gentle manner in the real world,” Yijiong concluded. “And now, we can quickly train AI agents in a simulated world in a matter of hours to perform bimanual activities that are tuned to the touch.”