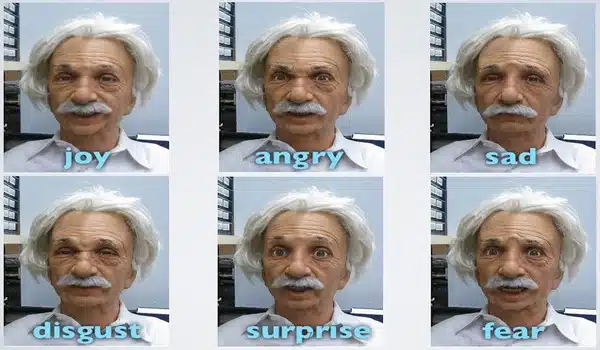

Teaching androids to smile entails teaching them to recognize and respond to facial expressions. A team of researchers used 125 physical markers to study the mechanics of 44 different human facial motions. The goal was to gain a better understanding of how to convey emotions using artificial faces. This research can benefit computer graphics, facial recognition, and medical diagnoses in addition to helping with the design of robots and androids.

Robots that can express human emotions have long been a staple of science fiction stories. To bring those stories closer to reality, Japanese researchers have been studying the mechanical details of real human facial expressions.

In a recent study published in the Mechanical Engineering Journal, a multi-institutional research team led by Osaka University has begun mapping out the intricacies of human facial movements. The researchers attached 125 tracking markers to a person’s face to closely examine 44 distinct, single facial actions, such as blinking or raising the corner of the mouth.

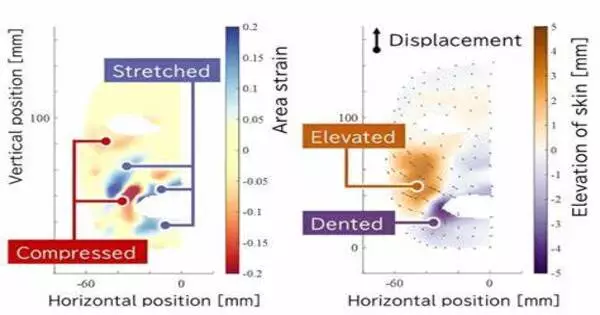

Every facial expression produces a variety of local deformations as muscles stretch and compress the skin, so even the most basic movements can be surprisingly complex. Beneath the skin, our faces contain many kinds of tissue, from muscle fibers to fatty adipose tissue, all working together to convey how we’re feeling, whether through a broad smile or a slight raise of the corner of the mouth. This level of detail is what makes facial expressions so subtle and nuanced, and what makes them so difficult to replicate artificially. Until now, such efforts have relied on much simpler measurements: the overall shape of the face and the motion of selected points on the skin before and after a movement.

“Our faces are so familiar to us that we don’t notice the fine details,” explains Hisashi Ishihara, lead author of the study. “But from an engineering perspective, they are amazing information display devices. By looking at people’s facial expressions, we can tell when a smile is hiding sadness, or whether someone’s feeling tired or nervous.”

The information gathered in this study can help researchers working on artificial faces, from digital faces on screens to, ultimately, the physical faces of android robots. Precise measurements of the tensions and compressions within human facial structure will allow these artificial expressions to appear both more accurate and more natural.

“The facial structure beneath our skin is complex,” says Akihiro Nakatani, senior author. “The deformation analysis in this study could explain how sophisticated expressions, which comprise both stretched and compressed skin, can result from deceivingly simple facial actions.”

This research also has applications beyond robotics, such as improved facial recognition and medical diagnosis; detecting abnormalities in facial movement currently relies on doctors’ intuition.

So far, the study has examined only one person’s face, but the researchers hope to use their findings as a springboard toward a broader understanding of human facial motion. In addition to helping robots recognize and convey emotion, this research could improve facial movement in computer graphics, such as in movies and video games, helping to avoid the dreaded ‘uncanny valley’ effect.