Technology has allowed us to immerse ourselves in a world of sights and sounds from the comfort of our home, but there’s something missing: touch.

Tactile sensation is a critical component of how humans perceive their surroundings. Haptics, or devices that can produce extremely specific vibrations that mimic the sensation of touch, are one way to bring that third sense to life. However, as far as haptics has progressed, humans are extremely picky about whether or not something feels “right,” and virtual textures don’t always hit the mark.

Now, researchers at the USC Viterbi School of Engineering have developed a new method for computers to achieve that true texture – with the help of humans. The framework, known as a preference-driven model, uses our ability to distinguish between the details of different textures as a tool to tune up these virtual counterparts.

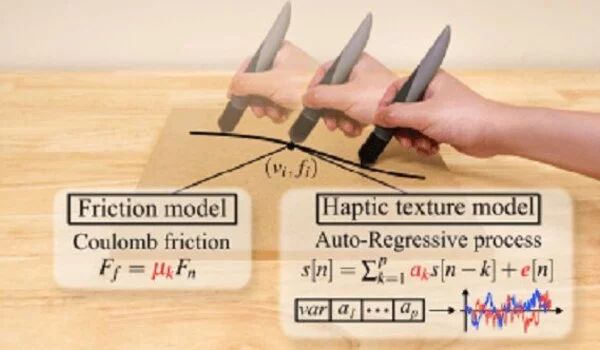

The research was published in IEEE Transactions on Haptics by three USC Viterbi Ph.D. students in computer science, Shihan Lu, Mianlun Zheng and Matthew Fontaine, along with Stefanos Nikolaidis, USC Viterbi assistant professor in computer science, and Heather Culbertson, USC Viterbi WiSE Gabilan Assistant Professor in Computer Science.

“We ask users to compare their feelings between the real texture and the virtual texture,” explained Lu, the first author. “The model then iteratively updates a virtual texture so that the virtual texture eventually matches the real one.”

According to Fontaine, the idea came up during a Haptic Interfaces and Virtual Environments class taught by Culbertson in the fall of 2019. They were inspired by the art application Picbreeder, which repeatedly generates images based on a user’s preferences until it achieves the desired result.

“We thought, what if we could do that for textures?” Fontaine recalled.

The user is given a real texture first, and the model generates three virtual textures at random using dozens of variables, from which the user can choose the one that feels the most similar to the real thing. The search adjusts the distribution of these variables over time as it gets closer to what the user prefers. This method has an advantage over directly recording and “playing back” textures, according to Fontaine, because there is always a gap between what the computer reads and what we feel.

“You’re measuring parameters of exactly how they feel it, rather than just mimicking what we can record,” Fontaine said. “There’s going to be some error in how you recorded that texture, to how you play it back.”
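For readers who want a feel for the mechanics, the pick-one-of-three loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: the function name `preference_search`, the four normalized parameters (the article mentions dozens), the Gaussian sampling, and the shrinking spread are all hypothetical. The sketch also keeps the current favorite as one of the three options, which the article does not state, and replaces the human with a simulated user who always picks the candidate nearest a hidden target.

```python
import math
import random


def preference_search(choose, dim=4, rounds=40, sigma=0.3, decay=0.95, seed=0):
    """Tune a texture parameter vector from three-way preference picks.

    `choose` receives three candidate vectors and returns the index (0-2)
    of the one that feels closest to the real texture.
    """
    rng = random.Random(seed)
    current = [0.5] * dim  # hypothetical normalized texture parameters
    for _ in range(rounds):
        # Candidate 0 is the current favorite (an assumption of this sketch);
        # the other two are random variations around it, clipped to [0, 1].
        candidates = [current] + [
            [min(1.0, max(0.0, rng.gauss(x, sigma))) for x in current]
            for _ in range(2)
        ]
        current = candidates[choose(candidates)]
        sigma *= decay  # narrow the sampling distribution over time
    return current


# Stand-in for a human: always picks the candidate nearest a hidden target.
target = [0.2, 0.8, 0.4, 0.6]


def simulated_user(candidates):
    distances = [math.dist(c, target) for c in candidates]
    return distances.index(min(distances))


tuned = preference_search(simulated_user)
```

Because the current favorite is always among the choices, a consistent user can never end a round with a worse match than they started with. Real users are noisier than this simulated one, which is part of why a practical system would need a more robust search strategy.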

The only thing the user has to do is choose the texture that matches best and adjust the amount of friction using a simple slider. Friction is essential to how we perceive textures, and its perception can vary from person to person. It’s “very easy,” Lu said.

Their work comes just in time for the emerging market for specific, accurate virtual textures. Everything from video games to fashion design is integrating haptic technology, and the existing databases of virtual textures can be improved through this user preference method.

“There is a growing popularity of the haptic device in video games and fashion design and surgery simulation,” Lu said. “Even at home, we’ve started to see users with those (haptic) devices that are becoming as popular as the laptop. For example, with first-person video games, it will make them feel like they’re really interacting with their environment.”

Lu has previously worked on immersive technology involving sound, specifically making virtual textures more immersive by introducing matching sounds when a tool interacts with them.

“When we use a tool to interact with the environment, tactile feedback is only one modality, one type of sensory feedback,” Lu explained. “Audio is another type of sensory feedback, and both are critical.”

The texture-search model also allows users to take a virtual texture from a database, such as the University of Pennsylvania’s Haptic Texture Toolkit, and refine it until they achieve the desired result.

“You can use the previous virtual textures searched by others, and then continue tuning it based on those,” Lu explained. “You don’t have to start from scratch every time.” According to Lu, this is especially useful for virtual textures used in training for dentistry or surgery, which require extreme accuracy.

“Surgical training is definitely a huge area that requires very realistic textures and tactile feedback,” Lu said. “Fashion design also necessitates a great deal of precision in texture development before they go and fabricate it.”

Real textures may not even be required for the model in the future, according to Lu. The way certain things in our lives feel is so intuitive that fine-tuning a texture to match that memory is something we can do inherently just by looking at a photo, without having the real texture for reference in front of us.

“When we see a table, we can imagine how it will feel once we touch it,” Lu explained. “Using the prior knowledge we have of the surface, you can simply provide visual feedback to the users, allowing them to choose what matches.”