Deepfake technology is becoming more realistic and sophisticated over time. It can be used for harmless purposes; however, the damage it can do in the wrong hands is very real.

This is why tools to detect these fake faces are necessary. Fortunately, a new study published on the preprint server arXiv has shown a way to detect AI-generated faces by looking deep into their eyes. Deepfakes are produced by a generative adversarial network (GAN), in which two neural networks work against each other to create realistic images, one generating and the other evaluating. The resulting faces are usually in a portrait setting, with the eyes looking directly at the camera. The authors of the paper think that this may be due to the real images the GAN is trained on. In real faces in this kind of setting, the two eyes reflect the same light environment.

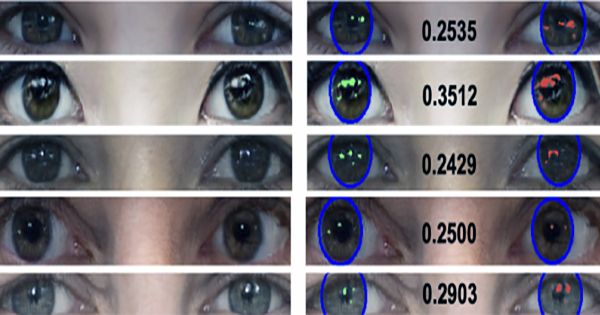

“The cornea is almost like a perfect semicircle and is very reflective,” lead author of the paper Professor Siwei Lyu explained in a statement. “Two eyes should have very similar reflective patterns because they are seeing the same thing. It is something we do not usually notice when we look at a face.” However, the researchers noticed an “interesting” difference between the two eyes in faces produced by AI. Working with faces from thispersondoesnotexist and Flickr-Faces-HQ, their method maps the face, then zooms in to examine the eyes, then the eyeballs, and finally the light reflected in each.

Differences between the two reflections, such as size and light intensity, are then compared. “Our experiments show that there is a clear division between the distribution of match scores of real and GAN synthetic faces, which can be used as a quantitative feature for their differences,” the authors write in the paper. On samples of portrait photos, the tool was 94 percent effective at telling fake and real faces apart.
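To make the idea of a reflection “match score” concrete, here is a minimal Python sketch. It is not the authors' implementation: it assumes the two eye regions have already been cropped to the same size and aligned, it treats any pixel near the brightest value in a crop as part of the reflection, and it uses intersection over union (IoU) of the two highlight masks as the score. The function names and the `threshold` parameter are hypothetical, chosen only for illustration.

```python
import numpy as np


def highlight_mask(eye_crop, threshold=0.8):
    """Return a boolean mask of pixels at least `threshold` times the crop's peak brightness.

    Assumption: a simple relative brightness cut-off is enough to isolate
    the reflection. The `threshold` value is illustrative only.
    """
    eye = np.asarray(eye_crop, dtype=float)
    peak = eye.max()
    if peak <= 0:
        # A completely dark crop has no highlight at all.
        return np.zeros(eye.shape, dtype=bool)
    return eye >= threshold * peak


def reflection_match_score(left_eye, right_eye, threshold=0.8):
    """Score how well the highlights in two aligned eye crops overlap (IoU, 0.0 to 1.0)."""
    left = highlight_mask(left_eye, threshold)
    right = highlight_mask(right_eye, threshold)
    if left.shape != right.shape:
        raise ValueError("eye crops must have the same shape")
    union = np.logical_or(left, right).sum()
    if union == 0:
        # No highlight in either eye: nothing to compare.
        return 0.0
    intersection = np.logical_and(left, right).sum()
    return float(intersection / union)


# Toy 8x8 grayscale crops with one bright square each.
left = np.zeros((8, 8))
left[2:4, 3:5] = 1.0

right_same = left.copy()          # reflection in the same place
right_shifted = np.zeros((8, 8))  # reflection somewhere else entirely
right_shifted[5:7, 0:2] = 1.0

score_same = reflection_match_score(left, right_same)
score_shifted = reflection_match_score(left, right_shifted)
```

In this toy case the identical reflections score 1.0 and the non-overlapping ones score 0.0. A detector built on such a score would flag faces whose score falls below some cut-off, and that cut-off would have to be chosen from real data.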

They think that the differences in eye reflections are due to the lack of physical and physiological constraints in the GAN models, as well as the fact that the images may be a combination of many different photos. However, the method does not work outside the portrait setting, and it can produce false positives in photos with a light source very close to the eye. They further emphasize that by editing in similar-looking eye reflections, deepfakes could be improved further. This type of tool can help identify AI-generated faces, which in turn helps in tracking fake accounts and the spread of misinformation.

“GAN-synthesized faces have crossed the ‘uncanny valley’ and are challenging to differentiate from real human face images; they are quickly becoming a new form of online disinformation. In particular, GAN-synthesized faces have been used as profile pictures for fake social media accounts to entice or deceive unaware users,” the authors write. “There are also potential political implications,” Professor Lyu explained. “Politicians in fake videos are saying or doing things they shouldn’t be doing. It’s bad.”