Researchers from the HSE International Laboratory for Supercomputer Atomistic Modeling and Multi-scale Analysis, JIHT RAS, and MIPT compared the performance of popular molecular modeling programs on AMD and Nvidia GPU accelerators. In a paper published in the International Journal of High-Performance Computing Applications, they also ported LAMMPS to AMD HIP, a new open-source GPU technology, for the first time.

The researchers examined the performance of three molecular modeling programs (LAMMPS, Gromacs, and OpenMM) on Nvidia and AMD GPU accelerators with comparable peak parameters. For the tests they used a model of ApoA1 (Apolipoprotein A1), a blood plasma protein that is the main carrier of “good cholesterol.” They found that the performance of research calculations depends not only on hardware parameters but also on the software environment. In particular, when many computing kernels are launched in parallel in complicated scenarios, inefficient AMD driver behavior can cause significant delays. Open-source solutions, in other words, still have drawbacks.

AMD HIP appears very promising because it allows a single code base to run on both Nvidia accelerators and AMD’s new GPUs. The modified version of LAMMPS has been released as open source and is available in the official repository, so users around the world can use it to speed up their calculations.

“The GPU accelerator memory sub-systems of the Nvidia Volta and AMD Vega20 architectures were thoroughly analyzed and compared. I discovered a difference in the logic of the GPU kernel parallel launch and demonstrated it by visualizing the program profiles. Memory bandwidth, the latencies of different levels of the GPU memory hierarchy, and effective parallel execution of GPU kernels all have a significant impact on the real performance of GPU programs,” said Vsevolod Nikolskiy, a doctoral student at HSE University and one of the paper’s authors.

The authors of the paper argue that the technological race among today’s microelectronics giants points to a clear trend toward a broader range of GPU acceleration technologies.

“On the one hand, this is a good thing for end users because it encourages competition, increases efficiency, and lowers the cost of supercomputers. On the other hand, because of the availability of several different types of GPU architectures and programming technologies, it will be even more difficult to develop efficient programs,” said Vladimir Stegailov, an HSE University professor. “Even supporting program portability for ordinary processors on various architectures (x86, Arm, POWER) is frequently difficult. Program portability between different GPU platforms is a much more complicated issue. The open-source paradigm eliminates many barriers and helps the developers of large, complex supercomputer software.”

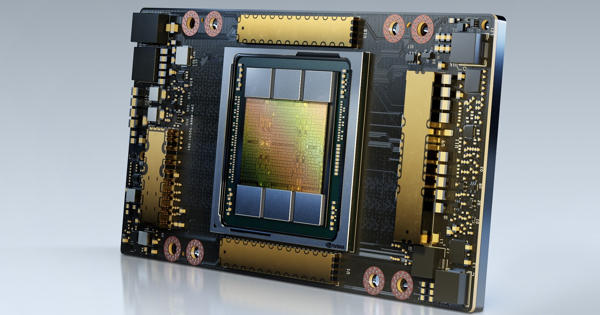

In 2020, the market for graphics accelerators faced a growing shortage. Their best-known applications are cryptocurrency mining and machine learning. GPU accelerators are also needed in scientific research, however, for the mathematical modeling of new materials and biological molecules.

“Developing powerful supercomputers and fast, effective programs is how we prepare the tools for solving the most complex global challenges, such as the COVID-19 pandemic. Today, computational tools for molecular modeling are being used all over the world to look for ways to combat the virus,” said Nikolay Kondratyuk, an HSE University researcher and one of the paper’s authors.

The most important mathematical modeling programs are created by international teams of researchers from dozens of institutions. They are developed in accordance with the open-source paradigm and released under free licenses. The competition between two modern microelectronics giants, Nvidia and AMD, has resulted in the emergence of AMD ROCm, a new open-source infrastructure for GPU accelerator programming. Because the platform is open source, code developed with it could potentially be ported to many different types of supercomputers. This strategy differs from that of Nvidia, whose CUDA technology is a closed standard.

It didn’t take long for the academic community to respond. The largest new supercomputer projects based on AMD GPU accelerators are nearing completion. Lumi, with 0.5 exaFLOPS of performance (equivalent to 1,500,000 laptops), is being built quickly in Finland. A more powerful supercomputer, Frontier (1.5 exaFLOPS), is expected in the United States this year, and the even more powerful El Capitan (2 exaFLOPS) is expected in 2023.