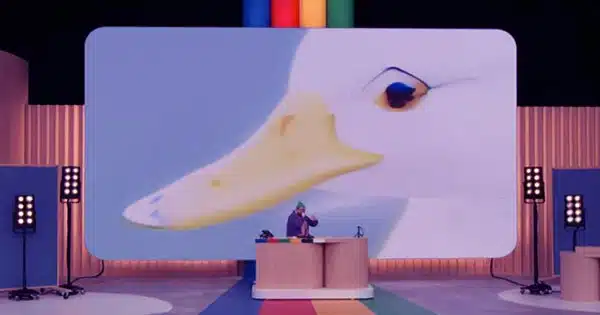

In addition to hardware announcements like the Pixel Fold and Pixel 7a, the Google I/O 2023 developer conference in Mountain View, California, focused heavily on AI. Google sought to demonstrate that it was winning the AI race with everything from image generation in Bard to generative AI wallpapers and AI-assisted writing.

One of the big developments for art and design is the integration of Adobe’s AI image generator Firefly into Google Bard (if you need to brush up on your AI skills, check out our list of the best AI art tutorials). Thankfully, Google also introduced new technology to help identify the massive number of AI images being made. Everything you need to know is right here.

Adobe Firefly brings AI art to Google Bard

Adobe made a significant announcement at Google I/O: its Firefly text-to-image generator is being integrated into Bard, Google’s experimental conversational AI service. Users will be able to describe the image they want and have it generated directly in Bard. The AI images can then be modified or used to create designs in Adobe Express.

Adobe said it will use the open-source Content Credentials technology developed by the Content Authenticity Initiative (CAI) to ensure image transparency. The project’s goal is to let people tell whether a piece of content was created by a person, generated by AI, or altered by AI. All AI-generated images will be labeled with metadata that identifies them as such.

The announcement follows Microsoft’s disclosure last year of plans to bring image generation to Bing, but the combination with Firefly and Adobe Express is likely to give users more power. Bard will be integrated into Google products such as the search engine, Docs, Lens, Photos, and Maps, allowing content creation in these apps as well as providing suggestions, lists, and search assistance.

The authenticity of AI images

On the subject of image authenticity, Google made a point during I/O of emphasizing the need for accountability when using and creating content with artificial intelligence. It revealed that users would be able to ask Google Lens to check the authenticity of images and that it is working on tools for adding watermarks and warnings.

Also at Google I/O, the tech giant said that Android users will be able to use Generative AI Wallpaper to create unique lock screens beginning this autumn. Users will be able to choose from a range of text prompts by tapping ‘make a wallpaper with AI’. The rest of the phone’s UI color palette will then be updated to match the new lock screen.

Google emphasizes that the model was trained on images in the public domain, ensuring that no copyright is violated. It also intends to release personalized ‘emoji’ and ‘cinematic’ backgrounds for Pixel devices as early as next month.

Google also announced text-based AI tools. During his keynote, Google CEO Sundar Pichai unveiled Magic Compose, which will be able to suggest changes to text messages in order to achieve a desired tone of voice. The technology will be available in beta this summer and can be accessed via a magic wand icon in Google’s messaging app.

“Our bold and responsible approach to AI can unlock people’s creativity and potential,” Pichai said. “We also want to make sure that this helpfulness reaches as many people as possible.”

Do you want to know what you can achieve with AI art? See our picks for the most bizarre AI art of 2023 so far. For more Google news, check out the reaction to the recent Google logo change.