An international team of scientists has demonstrated an early photonic processor that uses light confined within silicon chips to process information faster than electronic chips and in parallel, something conventional chips cannot do.

Data traffic is rising at an exponential rate, and this presents real challenges to computing capacity. The computing resources needed for artificial intelligence applications, such as pattern and speech recognition or self-driving cars, sometimes exceed the capability of traditional computer processors. As machine learning and AI spread, the upward trend is likely to continue, putting heavy pressure on existing processors to keep up with demand.

The researchers from the Swiss Federal Institute of Technology have developed a new light-based approach to combine processing and data storage onto a single chip.

Working with an international team, researchers at the University of Münster are designing new methods and process architectures that can cope with these challenges efficiently. They have now demonstrated that so-called photonic processors, in which data is processed by means of light, can process information much more quickly and in parallel, something electronic chips cannot do.

Background and methodology

Light-based processors for speeding up machine learning tasks allow complex mathematical operations to be processed at enormously fast speeds (10¹² to 10¹⁵ operations per second). Conventional chips such as graphics cards or specialized hardware like Google’s TPU (Tensor Processing Unit) are based on electronic data transfer and are much slower. The team of researchers led by Prof. Wolfram Pernice from the Institute of Physics and the Center for Soft Nanoscience at the University of Münster implemented a hardware accelerator for so-called matrix multiplications, which represent the main processing load in the computation of neural networks. Neural networks are a series of algorithms loosely modeled on the human brain. They are helpful, for example, for classifying objects in images and for speech recognition.

Once the chips were developed and manufactured, the researchers tested them on a convolutional neural network that identifies handwritten numbers.

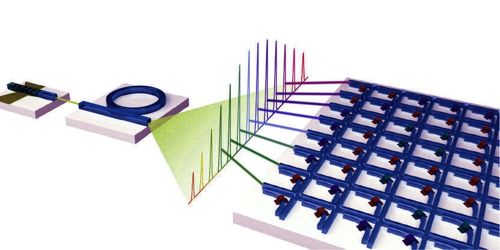

The researchers also combined photonic structures with phase-change materials (PCMs) as energy-efficient storage elements. PCMs are typically used in DVDs or Blu-ray discs for optical data storage. In the new processor, they make it possible to store and retain the matrix elements without an energy supply. To perform matrix multiplications on several data sets in parallel, the Münster physicists used a chip-based frequency comb as a light source.

The frequency comb provides a range of optical wavelengths that are processed independently of each other in the same photonic chip. This allows massively parallel data processing, because calculations run on all wavelengths simultaneously, a technique known as wavelength multiplexing. “Our study is the first one to apply frequency combs in the field of artificial neural networks,” says Wolfram Pernice.
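The idea of wavelength multiplexing can be illustrated with a small software analogy. The sketch below is not the researchers' implementation: the matrix `weights`, the channel names, and all values are assumptions chosen for illustration. It only shows that sending several input vectors through the same stored matrix, one per wavelength, amounts to several independent matrix-vector products that the optical hardware would carry out in a single pass.

```python
# Sketch: wavelength multiplexing modeled as a batched matrix-vector product.
# The matrix stands in for the weights held in the phase-change cells; each
# "wavelength" carries its own input vector through that same matrix.
# All names and values are illustrative assumptions, not data from the study.


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def multiplexed_pass(matrix, inputs_by_wavelength):
    """Apply the same stored matrix to every wavelength channel.

    In hardware the channels would propagate at the same time; here a
    dict comprehension stands in for that single parallel pass.
    """
    return {wl: matvec(matrix, vec) for wl, vec in inputs_by_wavelength.items()}


weights = [
    [1, 0, 2],
    [0, 3, 1],
]

inputs_by_wavelength = {
    "lambda_1": [1, 1, 1],
    "lambda_2": [2, 0, 1],
    "lambda_3": [0, 1, 0],
    "lambda_4": [1, 2, 3],
}

outputs = multiplexed_pass(weights, inputs_by_wavelength)
for wl, out in outputs.items():
    print(wl, out)
```

Because the channels do not interact, adding a wavelength adds another result per pass without changing the stored matrix.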

In the experiment, the physicists used a so-called convolutional neural network to identify handwritten numbers. These networks are a machine learning concept inspired by biological processes. They are used mainly in the analysis of image or audio data, where they currently attain the highest classification accuracy. “The convolutional operation between input data and one or more filters – which can be a highlighting of edges in a photo, for example – can be transferred very well to our matrix architecture,” explains Johannes Feldmann, the lead author of the study.
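To see why a convolution maps well onto a matrix architecture, consider the following minimal sketch. It is an illustration under assumptions, not the method used in the study: the helper names, the 4 x 4 image, and the 2 x 2 edge filter are all made up. Each image patch is flattened into one row of a matrix, so that applying the filter everywhere becomes a single matrix-vector product. As is customary in machine learning, the filter is not flipped.

```python
# Sketch: a 2D convolution rewritten as one matrix-vector product.
# All names and numbers are illustrative, not taken from the study.


def extract_patches(image, k):
    """Return every k x k patch of `image`, each flattened into one row."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(rows - k + 1):
        for c in range(cols - k + 1):
            patches.append([image[r + i][c + j]
                            for i in range(k) for j in range(k)])
    return patches


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def convolve_direct(image, kernel):
    """Reference: slide the kernel over the image (no padding, no flipping)."""
    k = len(kernel)
    rows, cols = len(image), len(image[0])
    return [
        sum(image[r + i][c + j] * kernel[i][j]
            for i in range(k) for j in range(k))
        for r in range(rows - k + 1)
        for c in range(cols - k + 1)
    ]


# 4 x 4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# 2 x 2 filter that responds to a left-to-right increase in brightness.
kernel = [
    [-1, 1],
    [-1, 1],
]

flat_kernel = [v for row in kernel for v in row]
as_matmul = matvec(extract_patches(image, 2), flat_kernel)

# Both routes give the same 3 x 3 response map (flattened row by row).
assert as_matmul == convolve_direct(image, kernel)
print(as_matmul)  # [0, 2, 0, 0, 2, 0, 0, 2, 0]
```

The nonzero entries mark the positions where the filter straddles the edge, which is the kind of edge highlighting the quote refers to.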

“Exploiting light for signal transfer allows the processor to conduct parallel data processing by wavelength multiplexing, which leads to greater computational density and many matrix multiplications being carried out in a single time step. In contrast to conventional electronics, which typically operate in the low GHz range, optical modulation can reach speeds in the 50 to 100 GHz range.”
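As a back-of-the-envelope illustration of how matrix size, parallel wavelengths, and modulation rate combine, the sketch below multiplies them into a rough count of multiply-accumulate operations per second. The function name and every number in it are hypothetical; they are not the configuration reported in the study.

```python
# Sketch: rough throughput estimate for one photonic matrix multiplier.
# Every parameter value below is a hypothetical example, not a figure
# from the study.


def macs_per_second(rows, cols, wavelengths, modulation_rate_hz):
    """Multiply-accumulate operations per second.

    Assumes that in each time step every wavelength channel passes through
    the full rows x cols matrix once.
    """
    return rows * cols * wavelengths * modulation_rate_hz


estimate = macs_per_second(
    rows=16,
    cols=16,
    wavelengths=4,
    modulation_rate_hz=10 * 10**9,  # 10 GHz, an assumed modulation rate
)
print(f"{estimate:.2e} MAC/s")
```

Under these assumed values the estimate is on the order of 10¹³ operations per second. The formula scales linearly in each factor, so doubling the number of wavelengths or the modulation rate doubles the estimate.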

The findings of the study have a wide variety of uses. In artificial intelligence, for example, more data can be processed concurrently while saving resources. Larger neural networks allow more accurate, and until now unattainable, predictions and more precise data analysis. Photonic processors could also support the evaluation of large volumes of data in medical diagnostics, for instance the high-resolution 3D data produced by special imaging techniques.

The study is published in Nature and has far-reaching applications: more simultaneous, energy-saving data processing in AI; larger neural networks for more accurate predictions and data analysis; evaluation of large amounts of clinical diagnostic data; faster evaluation of sensor data in self-driving vehicles; and expanded cloud computing infrastructure with more storage space.